DeepFake

The focus of this module is the staged development of a critical research essay investigating a chosen aspect of VFX. This will be an extended piece of written work equivalent to a minor dissertation. Led by a question that you develop, the module aims to encourage you, as VFX practitioners, to conceptualise and theorise your process and products in greater depth. The essay involves planning and carrying out both practical and contextual research, whose findings will be evidenced, reflected upon, and analysed in the written parts of the essay.

The technology behind deepfakes has roots in research from the 1990s and has since evolved to the point where it can be used in cinema. It is a form of artificial intelligence (AI): the software analyses the images and videos provided to it in order to create the face. One example is The Mandalorian, where Luke Skywalker famously appears in the last episode of season 2. Lucasfilm began working with deepfake technology, which involves feeding as many images and video clips of a person as possible into a computer. The AI is then able to learn a great deal about the person's face and how it moves when they talk or make a facial expression such as smiling, and can then copy them digitally. In addition, Lucasfilm decided to combine the performance of the original Luke with that of his double filmed on set, plus takes shot inside The EGG, where high-resolution images of the actor were collected. All of this made it possible to recreate Luke Skywalker as he looked in 1983.

Example 1

As a result of this technology, CGI will be used only as far as needed to complete the perception of the new character created by the AI. This process will not be cheaper, though, as the AI uses an algorithm that is constantly being updated. Deepfakes can make actors look younger by using images from their younger years. This may be the road to finally breaking the uncanny valley feeling with actors recreated fully in CGI.

References:

The Mandalorian: Luke Skywalker deepfake technology ‘could be used in really bad ways’ - CBBC Newsround. (n.d.). www.bbc.co.uk. [online] Available at: https://www.bbc.co.uk/newsround/58340104.

Bruce Willis denies selling rights to his face. (2022). BBC News. [online] 2 Oct. Available at: https://www.bbc.co.uk/news/technology-63106024 [Accessed 8 Oct. 2022].

Examples of the Deepfake:

Developing ideas, case studies, and project examples.

During week 2 we looked at assessments from previous years. We used them as examples of how to write our own essays, and as a way to compare and analyse our ideas against where we should be aiming.

What is the question? Can you outline the aims and objectives?

Does the photogrammetry of props convey realism in film scenes and contribute to the mise-en-scène?

- Focused on photogrammetry.

- Definition of photogrammetry and reality capture.

- A comparison between traditional 3D modelling, photogrammetry and aerial scanning.

- Where in the pipeline photogrammetry is used as a technique to get the data for the scene.

- How this data is used until you see it on the screen.

- Using findings from practical research.

- A comparison of different films and scenes where photogrammetry was used as a technique to recreate the CGI.

Can you describe the method used for the practical research?

- For a practical example, the student used his own iPhone 12 to scan a pair of shoes, and used Metashape to build three models from his scan.

What were the findings of the practical and/or theoretical research?

- The theoretical research was done at the beginning of the essay, where the student compared the different techniques used in different films. The practical part was a good example: using his own phone, and presumably after some research into how photogrammetry works, he got part of the shoe into the software. However, the photoscan is not well done; it misses some areas of interest at the front of the shoe.

What was the student's argument?

- The student is happy with the results of his scans even though they look incomplete, noting that he also captured parts of the floor and the door. He also said that, to be used in films, the technique needs to evolve to catch more of the viewer's eye.

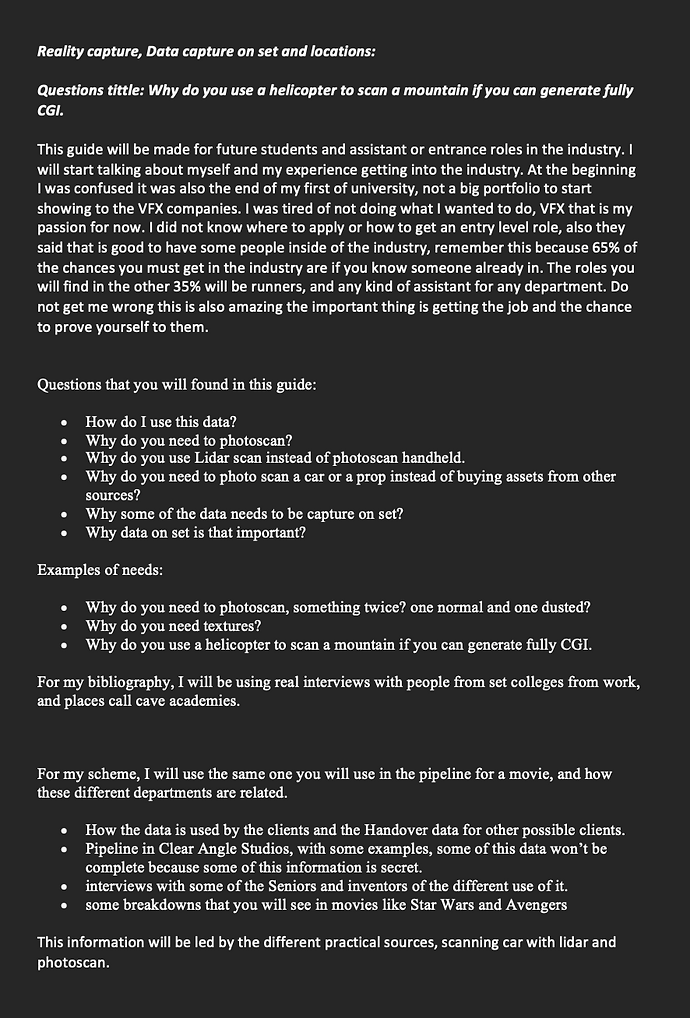

My idea for this project is to create a guide to capturing data within the pipeline. As I am based at Clear Angle Studios, I have the opportunity to use its equipment to show and discuss, step by step, how this data is captured and used by vendors. Part of the process will not be revealed, because some areas of it are confidential. I will mostly be using practical work, and I will also explain how data is captured on set, which will serve as an introduction to data wrangling.

Part of this guide will cover the different obstacles you will face in photogrammetry and data capture.

Designing a question and writing a proposal.

What's in the Proposal:

500-600 words:

• A clear, focused title or research question

• A list of searchable keywords

• An introduction to the investigative study

• Including the aims and objectives

• A methodology or a list of five key sources (references); these should be annotated

• Any important images as figures

Title of my essay:

My data capture guide and how it links to every part of the pipeline.

Questions you will find in this guide:

- How do I use this data?

- Why do you need to photoscan?

- Why use a lidar scan instead of a handheld photoscan?

- Why photoscan a car or a prop instead of buying assets from other sources?

Examples of needs:

- Why do you need to photoscan something twice, once normal and once dusted?

- Why do you need textures?

- Why use a helicopter to scan a mountain if you can generate it fully in CGI?

For my bibliography, I will use real interviews with colleagues from work who work on set, and places called cave academies.

For my structure, I will follow the same scheme used in the pipeline for a film, showing how the different departments are related.

- How the data is used by clients, and the handover of data for other possible clients.

- The pipeline at Clear Angle Studios.

- Interviews with some of the seniors and the people who developed its different uses.

- Some breakdowns from films such as Star Wars and Avengers.

This guide is aimed at future students and people starting in assistant or entry-level roles in the industry. I will start by talking about myself and my experience of getting into the industry. At the beginning I was confused: it was the end of my first year of university, and I did not have a big portfolio to show VFX companies. I was tired of not doing what I wanted to do, which is VFX, my passion. I did not know where to apply or how to get an entry-level role. People also said that it helps to know someone inside the industry. Remember this, because I would estimate that around 65% of your chances of getting into the industry depend on already knowing someone there. The roles you will find in the other 35% will be runners and assistants for any department. Do not get me wrong: these roles are also amazing. The important thing is getting the job and the chance to prove yourself.

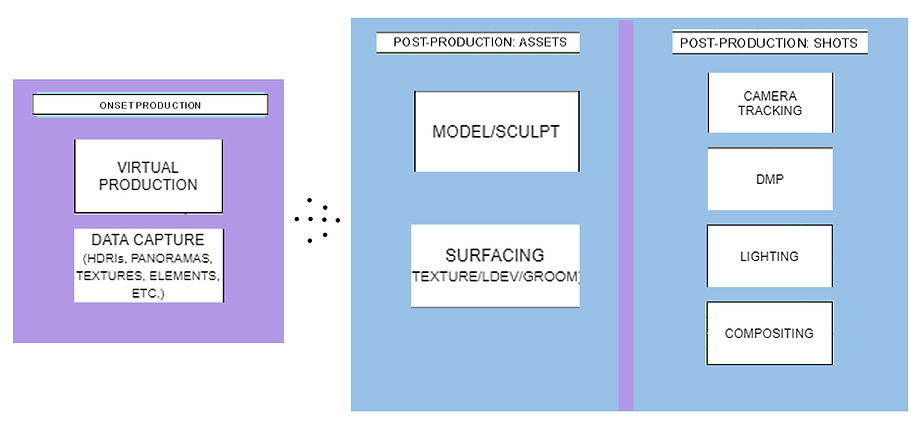

The Capture graphs

The graph I made shows how the data we capture at Clear Angle Studios is used. I speak from experience, having worked in two of the three departments at CAS (Clear Angle Studios): Capture and Lidar. I recently finished my training on the processing side, which makes it easier for me to understand how the data captured on set and in the rigs is used.

Methodologies and Literature Reviews

Interview questions:

Questions for Ross Martin / Position: Senior Lidar Technician.

- Why is it so important to capture data on set?

- How is the data used?

- Why do you use a lidar scanner instead of photoscanning the assets handheld? Give an example.

- Why does some of the data need to be captured on set?

- What do we need textures for?

- Why do we capture HDRIs and panos?

- Could the lidar scanner be used for light positioning and camera tracking?

- Why do we need to scan again every time something is moved on set?

Questions for Jack / Position: Specialist Capture Technician.

- What is Dorothy?

- How does it work?

- What are the differences between Dorothy and the other rigs at Clear Angle Studios?

- What are the differences between photogrammetry and photometric capture?

- What can you do with directional lights? What is the result?

- How important is this data for building an accurate 3D face of a character?

Questions for Matt / Position: Head of Capture.

- Why would you shoot from a helicopter instead of recreating the entire scene in CGI?

- Do you think that in the future we will build 3D assets using only photogrammetry and photometric capture, rather than 3D modelling with photogrammetry used only as a tool or reference?

- Why not use a lidar scanner instead of photogrammetry?

- Why is it photometric?

Questions for Jonty / Position: Capture Specialist.

- What was it like working on one of the most exciting scenes in Solo?

- What is scanning from a helicopter like?

- How can you make sure the scan will come out well enough to build a 3D model of the mountain?

- Why did you need to scan the mountain from a helicopter rather than with a drone?

From personal experience:

Questions I will answer myself from my own knowledge and experience, supported by other professionals who have worked on set and in-house.

- Why do you need to photoscan something twice, once normal and once dusted?

- How does arm scanning work?

- What does a data wrangler do on set?

Experience on set, and examples of tasks I have been given to scan on set and the reasons for them.

VFX Data Wrangling:

DATA WRANGLING:

VFX Data Wrangler description:

Who is the VFX data wrangler? It is the person who manages the data from VFX shoots. They must pay attention to detail, label files accurately, wrangle the data without loss, and notice corruption. They must have knowledge of digital cameras and computers, and understand cameras, file formats and storage media. In addition, they need problem-solving skills to fix kit, tech and cable connections. Data wranglers work with the camera operators and directors of photography. They also communicate with the post-production teams and with the production coordinators, who keep a log for the production team back in the office. In some instances, they work directly for the digital imaging technician.

Every shot that is on camera is potentially a VFX shot.

- Take pictures of the scene.

- Make notes about the scene.

- Make a note of the camera lenses.

Record camera data for both planned and unplanned VFX shots, including reference photos, witness cameras, color charts, camera details and place markers, and capture HDRIs. Set up, operate and maintain all on-set VFX gear on a day-to-day basis.

Upload, log and back up all on-set data every day. Back up and distribute on-set VFX data, photographs and videos to the appropriate recipients, following in-house protocols.
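The daily upload, logging and backup described above is normally done as a verified copy, so that every backup can be shown to be identical to the camera card. The following is a minimal Python sketch of that idea, assuming a plain folder-to-folder copy and SHA-256 checksums; `offload` and `sha256_of` are hypothetical names, and dedicated offload software used on set will work differently.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large raw files never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def offload(card: Path, *destinations: Path) -> dict:
    """Copy every file on a card to each destination and verify each copy.

    Returns a log of relative path -> SHA-256 checksum for the shoot report.
    Raises OSError if any copy does not match its source.
    """
    log = {}
    for src in sorted(p for p in card.rglob("*") if p.is_file()):
        rel = src.relative_to(card)
        source_hash = sha256_of(src)
        for root in destinations:
            dst = root / rel
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)  # copy2 also keeps file timestamps
            if sha256_of(dst) != source_hash:
                raise OSError(f"Checksum mismatch: {dst}")
        log[rel.as_posix()] = source_hash
    return log
```

Passing two destinations (for example a shuttle drive and a backup drive) means a card is only cleared once both copies have been checked against the source.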

Understand, and be able to draw, lighting and set-up diagrams, including measurements.

Even a close-up of a face can be a VFX shot: you may need to clean up something on the face or add something to it later.

The art department will be a good ally: you may need something like tape or a piece of wood to mark a spot in the scene that needs to be visible later for the VFX shot. A VFX data wrangler's shooting equipment must be the best available to obtain the best data.

A good tool for a data wrangler is the app Setellite, a premium on-set VFX organizer for supervisors and other related post-production professionals. Setellite enables you to collect and register all your on-set VFX data in an organized and effective way, and it is designed to be used during film or television shoots. Setellite also helps you keep track of information such as weather, location, take, plate and reference data on a per-slate basis. All of this can be exported to a PDF to share with editors, post-production supervisors, VFX supervisors and VFX artists.
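Setellite's own data format is not covered here, so the following is only a hypothetical sketch of the same idea: one record per slate, exported to a CSV file that can be shared with post-production. The `SlateEntry` fields are assumptions based on the information listed above (weather, location, take, plate, references).

```python
import csv
from dataclasses import asdict, dataclass, fields
from pathlib import Path


@dataclass
class SlateEntry:
    # Hypothetical fields, loosely following the per-slate data listed above;
    # this is not Setellite's own format.
    slate: str
    take: int
    location: str
    weather: str
    lens_mm: int
    plate: str = ""
    references: str = ""  # e.g. HDRI or color chart file names


def export_log(entries: list, out: Path) -> None:
    """Write one CSV row per slate/take for editors and the VFX team."""
    names = [f.name for f in fields(SlateEntry)]
    with out.open("w", newline="") as fh:
        writer = csv.DictWriter(fh, fieldnames=names)
        writer.writeheader()
        for entry in entries:
            writer.writerow(asdict(entry))
```

A CSV is used here only because it opens in any spreadsheet; the point is the per-slate structure, not the file type.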

VFX DATA WRANGLER & VFX SUPERVISOR TASKS:

- TRACKING.

- HDRI.

- BLUE/LUMA GREEN.

- CAMERA ON SET (settings and any in-camera adjustments made after the take).

- FRAME RATES.

- MOTION CONTROL.

- PREVIS.

- SHOTS REQUIRING MASSIVE AND CROWD PLATES.

- DISTANCE TO THE CAMERA.

- DISTANCE TO THE LENS.

- REEL.

VFX Data Wrangler equipment:

- Two Canon 5D cameras (any camera with good resolution and a high pixel count will do).

- Laptop, to keep all your files organized.

- iPad (with the Setellite app).

- Tracking markers. There are many different types of tracking marker; you could also make your own, but it is better to follow industry standards.

- Tape of any kind and color.

- Laser measure, such as a Leica or Bosch. It measures the distance from one point to another, for example from the position where the 3D asset will be in the scene to the camera position. You can also measure the distance to the lights; these measurements can be applied in the 3D software to replicate the same parameters in 3D space.

- 8mm lens for the HDRI.

- Tripod with a rotatable ball head, so you can shoot flat textures of the floor or of something located on the floor.

- A Nodal Ninja head, which will be useful for your panos.

- Ping pong balls / tennis balls.

- Sharpies.

- Lens distortion grid.

- A Macbeth (color chart).

- Chrome ball.

- Grey ball.

- Real textures and references that the art department may have created, so the VFX team has more data to work with; these textures can be hair or animal skin. They can be filmed in front of the camera as references to see how they are affected by the weather and lights.

- Tape measure.

- GoPro cameras as witness cameras, attached to light stands.

- Lenses: 24mm, 50mm, 100mm, and a 24-105mm zoom just in case.

- SD cards.

- Batteries.

- Chargers.

- HDDs and SSDs.

- Trolley.

Some data wranglers will bring a lidar scanner and mini studio set-ups to set to do photogrammetry on props. This depends on the production's budget.
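The laser measure in the equipment list gives a straight-line distance. Some models also report the tilt of the beam; assuming you have both readings, the horizontal distance and height difference to enter into the 3D scene follow from basic trigonometry. `split_measurement` is a hypothetical helper and the example values are made up.

```python
import math


def split_measurement(slope_distance_m: float, tilt_deg: float) -> tuple:
    """Convert a laser reading into (horizontal distance, height difference).

    slope_distance_m: distance along the laser beam, in meters.
    tilt_deg: beam angle above (+) or below (-) the horizontal, in degrees.
    """
    tilt = math.radians(tilt_deg)
    horizontal = slope_distance_m * math.cos(tilt)
    vertical = slope_distance_m * math.sin(tilt)
    return horizontal, vertical


# Example: a light measured 5.0 m away, 30 degrees above the camera position.
h, v = split_measurement(5.0, 30.0)
print(f"horizontal {h:.2f} m, height {v:.2f} m")  # prints: horizontal 4.33 m, height 2.50 m
```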

Practical Test:

Phase 2.


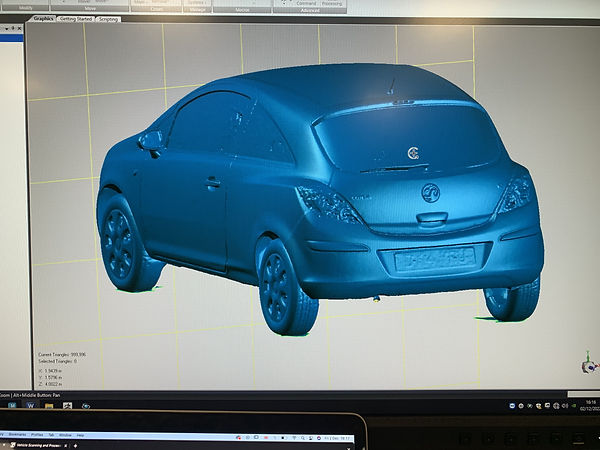

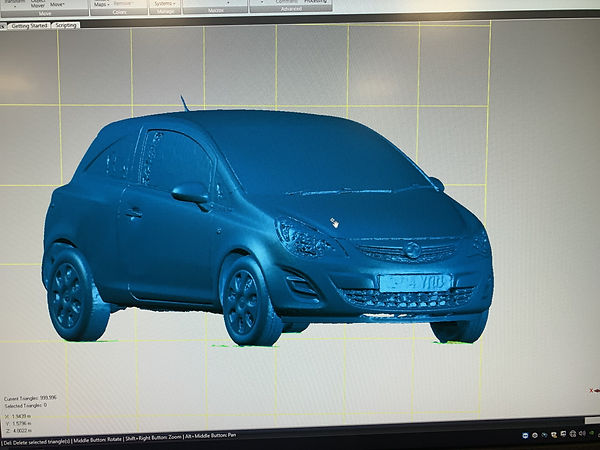

Lidar scan of a car. It is common for us to scan all types of vehicles, from small cars to helicopters and jumbo jets.

The ideal scanning environment would be a stage with a solid floor and a minimum distance around the vehicle of 5 meters. In an interior scan, the vehicle will have minimal movement (outside, the wind will play a role). Consider scanning in this environment if possible and somewhere where people will not try to enter or exit the vehicle during the scan. Keep an eye out and stop people approaching the vehicle during scanning.

Consider how you will register the data if you are scanning outside (e.g. can you use nearby buildings to register the scans together) and ideally use targets so that registering scans will be faster and easier. In addition, if you are working outside, make sure the vehicle is parked in a good position (e.g. not under a tree).

When scanning outside, the weather also has a significant impact. Keep an eye on it throughout the day, and if it starts to get windy, note it in the shoot log. Windy conditions may also make elevated scans (those on stands) impractical, so inform production that it might not be possible to capture the tops of the vehicles.

Due to a laser's physical constraints, lidar scanners typically have trouble handling dark, glossy objects. Leica's RTC scanners handle dark surfaces better than most scanners, but they still fall short of where we would like them to be. Lidar scanners are line-of-sight devices that produce point data from the returning laser signal. If you point the laser at a transparent, reflective, or dark (almost black) surface, the laser will be refracted, reflected, or absorbed, and the scanner will not receive the returning signal. This means that no data will be collected for that area.

If you have a black car (or a very shiny car), you should powder the car before scanning. If you are asked to scan a black car, it's important to ask whether it can be dusted to address the problem. If the car is a hero car, this may mean it is brought back for scanning after it has been used on set.

Lidar scan of any area.

Environmental data collection can be performed using a variety of remote sensing techniques. Lidar is one type: an active system that uses light (in the form of laser pulses) to calculate distance. A lidar unit has a laser reflected by a rotating mirror, which sprays the laser beam onto the surrounding area and calculates distance based on phase or time of flight. This happens so fast that it can collect up to 2 million points per second, with a range of up to 1 km.
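The two ranging principles mentioned above reduce to simple formulas. Time of flight halves the round-trip travel time of a pulse; phase-based ranging converts the phase shift of a modulated beam into distance, which is only unique within an ambiguity interval. The sketch below is a textbook illustration, not how any particular Leica unit is implemented, and the 10 MHz modulation frequency in the tests is an assumed value.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second, in vacuum


def distance_time_of_flight(round_trip_s: float) -> float:
    """The pulse travels to the surface and back, so halve the total path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def distance_phase(phase_shift_rad: float, modulation_hz: float) -> float:
    """Phase-based ranging: d = c * delta_phi / (4 * pi * f).

    Only unique within ambiguity_interval(f); phase-based scanners
    typically combine several modulation frequencies to resolve this.
    """
    return SPEED_OF_LIGHT * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def ambiguity_interval(modulation_hz: float) -> float:
    """Largest distance a single modulation frequency can measure unambiguously."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)
```

For scale, a return from 1 km away arrives after only about 6.7 microseconds, which is why millions of points per second are possible.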

The lidar scanner captures data in a 360-degree sphere from each setup, with a range of up to 1 km for the Leica P50 (about 130 m for the RTC360). Lidar technicians need to move the scanner around the set to capture dense point clouds covering all angles, so that the final model is free of occlusions. To achieve this, we use multiple setups at different heights for maximum coverage.

When registering scans, make sure there are enough overlapping points between scans for accurate registration. To do this, think about which scans will be linked in your set and make sure there is enough shared data between them. Imagine that each scan is a piece of a jigsaw puzzle: each piece should overlap its neighbours (by at least 25%) so that, when you put them all together to complete the picture, you know where each piece fits. Before using lidar to scan a set or location, it's a good idea to walk around the area and plan a route to collect data as efficiently as possible. Ideally, move through the set in a logical order rather than jumping around: jumping makes registration much more difficult and increases the chance of missing areas, because you lose track of where you have scanned from.
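The 25% rule of thumb above can be checked roughly in code. Registration software computes its own overlap and error statistics, so this is only a simplified proxy: both scans are snapped to a voxel grid (an assumed 10 cm cell size) and we measure what fraction of one scan's occupied cells also appear in the other. It assumes both scans are already roughly in the same coordinate system, and the function names are hypothetical.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]


def voxels(points: Iterable[Point], size: float) -> Set[Tuple[int, int, int]]:
    """Snap each point to the integer index of the cube (voxel) containing it."""
    return {
        (math.floor(x / size), math.floor(y / size), math.floor(z / size))
        for x, y, z in points
    }


def overlap_fraction(scan_a: Iterable[Point], scan_b: Iterable[Point],
                     size: float = 0.1) -> float:
    """Fraction of scan B's occupied voxels that scan A also occupies."""
    va, vb = voxels(scan_a, size), voxels(scan_b, size)
    if not vb:
        return 0.0
    return len(va & vb) / len(vb)


def enough_overlap(scan_a, scan_b, size: float = 0.1, minimum: float = 0.25) -> bool:
    """True if the overlap meets the jigsaw-style minimum (25% by default)."""
    return overlap_fraction(scan_a, scan_b, size) >= minimum
```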

Important steps for LIDAR scanning:

- Walk through the set and plan a route of where to scan from.

- Place the tripod on firm ground and level the scanner. ALWAYS LEVEL THE SCANNER, as this speeds up processing and helps the accuracy of the registration.

- Check that there is enough overlap with the last scan.

- Plan the next position to ensure overlap.

- Set the correct settings and then scan from that position.

Photoscan of the old nurse house, including textures: the steps I followed.

Manual photoscanning for props and sets:

1) Start with your Macbeth (color chart) and the chrome and grey balls.

• Make sure the entire prop is in the frame and you can see the color chart and the chrome/grey sphere from the front.

• Make sure the meter reading is not thrown off by the background, and expose the prop accurately. This is always a problem except on 11a, where all the walls are white. Refer back to the metering section for how to deal with this.

• Bracket the exposures. Once you have captured one half of the ball, take two more shots with the ball rotated to capture the other side.

2) Eight-position bracketed texture round.

• Change the camera's folder to keep the texture captures separate from the reference you have just shot.

• Move around the prop and capture 8 sets of bracketed images. If the chrome/grey ball blocks the prop, remove it for the rest of the capture.

• As you move around your props, be aware that you may need to adjust your shutter speed depending on the texture of what you're looking at and changing lighting conditions.

3) Photoscan - Wide

• The camera must be set to single frame, as photoscan data does not need to be bracketed.

• Circle the prop using the same framing as the texture round. There are no hard and fast rules about how many frames or how many rounds you need; it depends on the complexity of the prop. If you need guidance, prop rigs, which are designed to capture very complex objects, take shots at 8 positions in 45-degree increments, so start from there if needed.

• Note that narrow prop corners need more frames, and wide flat surfaces need fewer.

• If your prop is not tall, you can also use this lens to do a low pass and a high pass from that distance. If the prop is too big to fit in frame for the high pass with your current lens, switch to a shorter lens to get a better angle.

4) Photo Scan - Tight

• Get up close to capture details, or recapture the entire prop in sections if the wide shots do not provide enough resolution.

• If the prop is large, switch to a longer lens and take two more shots to capture more detail in the top and bottom halves of the prop (keeping the 30% overlap of course).

• Difficult parts of the prop should also be covered, e.g. the tops of tall props or complex surfaces that are not captured from a horizontal perspective. Don't forget to get quality textures of all areas of your prop.

• When doing close-up photoscanning, take the opportunity to make sure the scale markings are well covered. We need 3 images with both markings in frame and in focus, so they can be matched to the rest of the prop.

5) Texture

• Capture bracketed textures from the following locations:

• The full prop, 8 positions in 45-degree increments.

• The top and bottom halves of the prop, 8 positions each (if the prop is big enough).

• Flat textures of any planar surfaces.

• The top and bottom of the prop, shot as flat as possible.

• All areas with intricate detail.
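The numbers in these steps (8 positions at 45-degree increments, about 30% overlap for the tight pass) can be turned into a quick capture plan. The sketch below assumes a full-frame sensor 36 mm wide and a simple pinhole lens model; `ring_positions`, `frame_width` and `max_step` are hypothetical helpers, and corners and fine detail will still need extra frames by eye.

```python
import math


def ring_positions(radius_m: float, count: int = 8) -> list:
    """Camera positions on a circle around the prop, as (angle_deg, x, y).

    With count=8 this gives the 45-degree increments used by prop rigs.
    """
    step = 360.0 / count
    return [
        (i * step,
         radius_m * math.cos(math.radians(i * step)),
         radius_m * math.sin(math.radians(i * step)))
        for i in range(count)
    ]


def frame_width(distance_m: float, focal_mm: float, sensor_mm: float = 36.0) -> float:
    """Width of the subject covered by one frame (pinhole approximation)."""
    return distance_m * sensor_mm / focal_mm


def max_step(distance_m: float, focal_mm: float, overlap: float = 0.3,
             sensor_mm: float = 36.0) -> float:
    """Largest sideways move between tight shots that keeps the requested overlap."""
    return frame_width(distance_m, focal_mm, sensor_mm) * (1.0 - overlap)
```

For example, with a 50mm lens 1 m from the surface, one frame covers about 0.72 m, so moving no more than about 0.5 m between shots keeps roughly 30% overlap.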


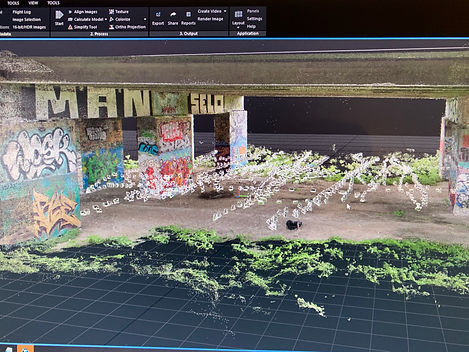

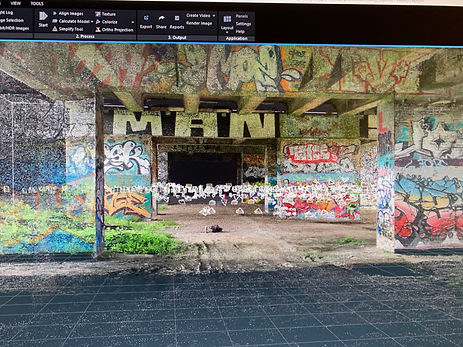

Underpass Photoscan

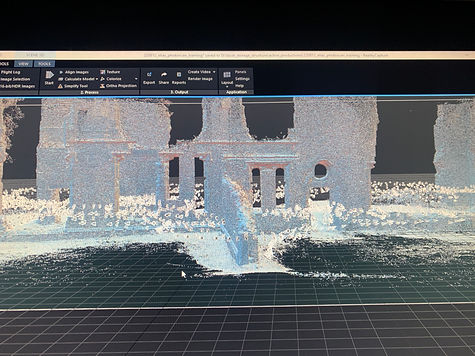

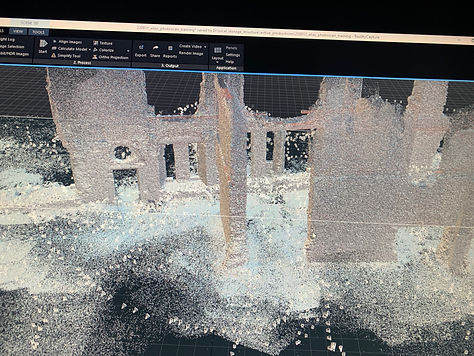

Old nurse house Photoscan

Panos

Panos: good and bad examples of capturing panoramas.

A Pano is a collection of photographs that can be stitched together to create a high-resolution image covering a wider angle or a complete 360 degree ‘bubble’.

The Roundshot is a machine that captures a 360-degree ‘bubble’ automatically, which allows other data to be captured at the same time. (See the Roundshot section.)

Nodal Ninja/Pano Capture

Before you go on a shoot, make sure you have calculated the nodal (no-parallax) point of each lens you will be using. In most cases you should have at least a 50mm, a 35mm and a 24mm. The 35mm and 24mm are the lenses you will use most, together with a Canon 5DS or 5DS R and a Mk2 or Mk3 Nodal Ninja. (For the Roundshot kit, the nodal points have already been worked out. With Nodal Ninjas this varies depending on the version you have; some have been worked out, but it is always best to check the nodal point before heading out to a shoot.) Always shoot each pano into a new, separate folder, and do not forget to include a color chart.
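How many shots a pano row needs depends on the lens's angle of view and the overlap you want for stitching. The rough sketch below assumes a rectilinear lens on a full-frame body mounted in portrait orientation (so the 24 mm sensor side is horizontal) and an assumed 25% overlap; under those assumptions the 24mm, 35mm and 50mm lenses need 10, 13 and 18 shots per row. Fisheye lenses such as the 8mm do not follow this formula, and extra rows above and below are still needed for a full bubble.

```python
import math


def horizontal_fov_deg(focal_mm: float, sensor_side_mm: float = 24.0) -> float:
    """Angle of view across one sensor side for a rectilinear lens.

    24 mm is the short side of a full-frame sensor, i.e. the horizontal
    side when the camera is mounted in portrait on the pano head.
    """
    return math.degrees(2.0 * math.atan(sensor_side_mm / (2.0 * focal_mm)))


def shots_per_row(focal_mm: float, overlap: float = 0.25,
                  sensor_side_mm: float = 24.0) -> int:
    """Number of evenly spaced shots needed to cover 360 degrees with overlap."""
    step = horizontal_fov_deg(focal_mm, sensor_side_mm) * (1.0 - overlap)
    return math.ceil(360.0 / step)


for lens in (24, 35, 50):
    print(lens, "mm:", shots_per_row(lens), "shots per row")
```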

Good Pano Example: outside of my office

Bad Pano Example: outside of my office

Capturing textures. All texture photos require flat light. If you can only shoot on sunny days, you'll need to know the path of the sun.

Using a sun pathfinder like Helios will show you the trajectory throughout the day and allow you to determine which areas of the environment/set will be in shadow throughout the day. This allows me to shoot the set in the flattest light possible.
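Sun-path apps such as Helios do this properly. As a rough illustration of the kind of calculation behind them, the sketch below estimates the sun's elevation from latitude, day of year and local solar time, using a common textbook approximation for the solar declination. It ignores the equation of time, time zones and atmospheric refraction, so results are approximate; the function names are hypothetical.

```python
import math


def solar_declination_deg(day_of_year: int) -> float:
    """Approximate solar declination (degrees) for a day of the year (1-365)."""
    return -23.44 * math.cos(math.radians(360.0 / 365.0 * (day_of_year + 10)))


def sun_elevation_deg(latitude_deg: float, day_of_year: int, solar_hour: float) -> float:
    """Approximate sun elevation above the horizon, in degrees.

    solar_hour is local *solar* time (12.0 = sun at its highest), not clock time.
    """
    lat = math.radians(latitude_deg)
    dec = math.radians(solar_declination_deg(day_of_year))
    hour_angle = math.radians(15.0 * (solar_hour - 12.0))  # sun moves 15 degrees per hour
    sin_elev = (math.sin(lat) * math.sin(dec)
                + math.cos(lat) * math.cos(dec) * math.cos(hour_angle))
    return math.degrees(math.asin(sin_elev))


# Rough comparison for a latitude of 51.5 N around the two solstices.
for day, label in ((172, "June"), (355, "December")):
    print(label, [round(sun_elevation_deg(51.5, day, h)) for h in (9, 12, 15)])
```

A low winter sun means long shadows across a set for most of the day, which is exactly what this kind of check helps you plan around.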

Most of the time you don't have enough time to work around the sun or wait for the clouds to come over, so do the best you can. Always watch the weather.

If you need to shoot a set in a studio, having the set all to yourself is preferable, but not always possible. Flat lights from sky panels or space lights work well but need to be organized beforehand by the VFX coordinator or assistant coordinator.

If fixed lighting is not available, the only option is to use the house lights. The house lights in the studio are not very bright, which slows down the work and lengthens the time it takes to shoot the set. In some cases, you may be asked to shoot on set under the same lighting conditions as the main shoot. This may be intended for 2.5D projection and Rotonis work rather than a full CG reconstruction. In such situations, try to get a color map for all the different light sources.

Phase 3:

How is some of the data used?

Paintout - When filming with a crew of over 200 people, things sometimes appear in the shot or location that were not noticed or are not wanted, such as coffee cups, cables or equipment, and these need to be painted out of the frames. We capture texture reference photography to aid in this task.
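One simple way clean reference photography helps with paint-out is a masked blend: wherever a mask marks the unwanted object, pixels are taken from the clean reference instead of the plate. This is a minimal sketch, assuming the clean reference has already been aligned to the plate and that images are plain nested lists of grey values; real compositing work also has to match grain, colour and perspective.

```python
def paint_out(plate: list, clean: list, mask: list) -> list:
    """Blend per pixel: mask 1.0 -> clean reference, 0.0 -> original plate.

    All three images are lists of rows of floats with the same dimensions.
    Fractional mask values give a soft edge between the two sources.
    """
    return [
        [m * c + (1.0 - m) * p for p, c, m in zip(p_row, c_row, m_row)]
        for p_row, c_row, m_row in zip(plate, clean, mask)
    ]
```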

Full CG reconstruction - When a film set is only partially built, or lots of green screen is used due to restrictions in cost and space, the set will often need to appear bigger, longer or better in the final production. Our scan and photography data gives VFX artists everything they need to extend and build sets in CG to whatever size and shape the final product requires.

CG creatures - If you have computer generated animals/people/creatures running around in the scene being filmed, our lidar data is used for tracking purposes so VFX artists can accurately move the CG assets over the same terrain and objects, so they appear more realistic.

Light and camera position reference - Because a lidar scan can reach up to 130 meters, we are frequently asked to capture the positions of lights so that light direction can be replicated in post-production. Also, if the position of a camera is requested, we can use the lidar scan to accurately measure the height of the camera and its distance to any object or space required, again to replicate camera moves in post-production if required.
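Once the scan is registered, measuring from it is just geometry between picked points. The minimal sketch below assumes the lidar coordinates are in meters with Z pointing up, and that the camera and target positions have already been picked from the point cloud; `measure` is a hypothetical helper and the coordinates are made up.

```python
import math


def measure(camera: tuple, target: tuple) -> dict:
    """Distances and tilt angle from a camera position to a target picked in the scan."""
    dx, dy, dz = (t - c for c, t in zip(camera, target))
    horizontal = math.hypot(dx, dy)
    return {
        "distance": math.dist(camera, target),  # straight line, camera to target
        "horizontal": horizontal,               # along the floor plane
        "height_difference": dz,                # positive if the target is higher
        "tilt_deg": math.degrees(math.atan2(dz, horizontal)),  # tilt needed to aim at it
    }


# Hypothetical points picked from a registered scan (meters).
camera = (0.0, 0.0, 1.5)
light = (3.0, 4.0, 6.5)
print(measure(camera, light))
```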

Generally - If something can't be done in real-life due to health and safety, logistics or if something appears impossible - our data can be used to create the impossible through Visual Effects and CGI.

References:

Martin, R. (2022). Why do you use a helicopter to scan a mountain if you can generate fully CGI. [online] 25 Nov. Available at: https://poelias117.wixsite.com/eliasrodrsketchbook/copia-de-environmental-fx [Accessed 25 Nov. 2022].

Industrial Light & Magic (n.d.). Creating Vandor’s Train Heist Sequence - Solo: A Star Wars Story. [online] Facebook. Available at: https://www.facebook.com/watch/?v=353168948615497 [Accessed 23 Nov. 2022].

Electronic Arts (2016). Photogrammetry and Star Wars Battlefront - Frostbite. [online] Electronic Arts Inc. Available at: https://www.ea.com/frostbite/news/photogrammetry-and-star-wars-battlefront.

Bowler, M. (2022). Why do you use a helicopter to scan a mountain if you can generate fully CGI. [online] 22 Nov. Available at: https://poelias117.wixsite.com/eliasrodrsketchbook/copia-de-environmental-fx [Accessed 22 Nov. 2022].

Other interviews:


LinkedIn profile for Matt Bowler.

1- Why would you choose to generate an asset using photogrammetry from a helicopter or drone rather than recreate the entire scene using CGI?

Capturing a real-world asset by means of photogrammetry, whether from a helicopter, a drone or on the ground, is a far faster and more accurate way of producing photo-real assets than generating them from scratch by modelling and texturing. It would take an artist (or artists) far longer to create a photo-real asset than to capture one by means of photogrammetry. Photogrammetry also has the added benefit of having camera positions embedded in the data, which can be used to project the captured textures back onto an asset if it was retopologised, for use in a game engine for example.

Capturing assets using photogrammetry is, of course, limited to objects and environments that physically exist and are accessible, and to materials and surfaces that scan well.
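The camera positions embedded in photogrammetry data, mentioned in the answer above, are what make texture re-projection possible: given a solved camera's position, orientation and focal length, any 3D point on the retopologised mesh can be mapped to a pixel in the original photo. This is a minimal pinhole-camera sketch, assuming no lens distortion, a camera looking along its own +Z axis and made-up intrinsics; photogrammetry packages such as Metashape use fuller camera models.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def project(point: Vec3, cam_center: Vec3, world_to_cam: Tuple[Vec3, Vec3, Vec3],
            focal_px: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Map a 3D world point to pixel coordinates with a simple pinhole camera.

    world_to_cam is the 3x3 rotation from world axes to camera axes,
    with the camera looking along its own +Z axis. Lens distortion is ignored.
    Returns None if the point is behind the camera.
    """
    # Express the point relative to the camera: p_cam = R (X - C)
    rel = [p - c for p, c in zip(point, cam_center)]
    x, y, z = (sum(r * v for r, v in zip(row, rel)) for row in world_to_cam)
    if z <= 0:
        return None  # behind the camera, so not visible in this photo
    # Perspective divide, scale by focal length in pixels, shift to the principal point
    return (focal_px * x / z + cx, focal_px * y / z + cy)
```

In a real re-projection workflow this is done for every texel of the new mesh, picking whichever photo sees that point most directly.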

2- Do you think in the future photogrammetry and photometrics will be used more widely to generate 3D assets? For example, artists won’t create 3D model real world assets from scratch?

With platforms like the Metaverse pushing the boundaries of asset realism, and engines like Unreal being able to load extremely poly-heavy assets, the benefits of using assets captured via photogrammetry are only going to grow. Even companies like Apple are implementing photogrammetry software in their ‘ARKit / RealityKit’ frameworks for generating photogrammetry data.

3- Why not use a LiDAR scanner for everything instead of photogrammetry?

Whilst LiDAR scanning has its place where millimetre accurate scale is a requirement, photogrammetry has the added benefit of texture acquisition alongside geometry meaning that assets captured via photogrammetry can be pushed further down the pipeline quicker. LiDAR scanning only works for static objects like sets / environments / large props and cannot capture subjects with movement (human for example).

Presentation/Workshop